Welcome to my Robotics Portfolio!

I'm Max Rucker, a graduate student at the University of Michigan pursuing a Master’s in Robotics. Here you will find research and personal projects I have worked on over the past four years. I am interested in autonomous vehicles and in developing new machine-learning methods for simultaneous localization and mapping (SLAM).

Thanks for visiting—I hope you enjoy exploring my work!

Current Projects

SonarSplat: Novel View Synthesis of Imaging Sonar via Gaussian Splatting

Field Robotics Group, August 2024 - April 2025

Abstract - In this paper, we present SonarSplat, a novel Gaussian splatting framework for imaging sonar that demonstrates realistic novel view synthesis and models acoustic streaking phenomena. Our method represents the scene as a set of 3D Gaussians with acoustic reflectance and saturation properties. We develop an efficient method to rasterize the learned Gaussians into a range/azimuth image that is faithful to the acoustic image formation model of imaging sonar. In particular, we show how to model azimuth streaking within a Gaussian splatting framework. We evaluate SonarSplat on real-world sonar datasets collected from an underwater robotic platform in a controlled test tank and in a river environment. Compared to the state of the art, SonarSplat offers improved image synthesis (+2.5 dB PSNR). We also demonstrate that SonarSplat can be leveraged for azimuth streak removal and 3D scene reconstruction.
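As a rough illustration of the range/azimuth geometry described above, the sketch below bins 3D points (not Gaussians) into a polar sonar image. The sensor frame (x forward, y left, z up), image size, range limits, and field of view are hypothetical values, and this simple point binning is not SonarSplat's rasterizer; it only shows why elevation is lost when a scene is imaged by sonar.

```python
import math


def project_to_sonar_image(points, intensities, n_range=128, n_beams=96,
                           r_min=0.5, r_max=10.0, fov_deg=130.0):
    """Bin 3D points into a range/azimuth image of summed intensities.

    Hypothetical conventions: sensor frame with x forward, y left, z up;
    rows index range bins and columns index azimuth bins.
    """
    half_fov = math.radians(fov_deg) / 2.0
    image = [[0.0] * n_beams for _ in range(n_range)]
    for (x, y, z), value in zip(points, intensities):
        r = math.sqrt(x * x + y * y + z * z)  # slant range to the point
        azimuth = math.atan2(y, x)            # bearing in the horizontal plane
        # Elevation is never used: every point on the same range/azimuth arc
        # lands in the same pixel, which is the ambiguity of imaging sonar.
        if not (r_min <= r < r_max and -half_fov <= azimuth < half_fov):
            continue  # outside the range window or field of view
        row = min(int((r - r_min) / (r_max - r_min) * n_range), n_range - 1)
        col = min(int((azimuth + half_fov) / (2.0 * half_fov) * n_beams),
                  n_beams - 1)
        image[row][col] += value
    return image


# Two points at the same range and azimuth but different elevations.
img = project_to_sonar_image([(5.0, 0.0, 0.0), (3.0, 0.0, 4.0)], [1.0, 0.5])
```

Both returns are summed into one pixel here; a differentiable splatting renderer would instead spread each Gaussian's footprint over neighboring range/azimuth bins.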

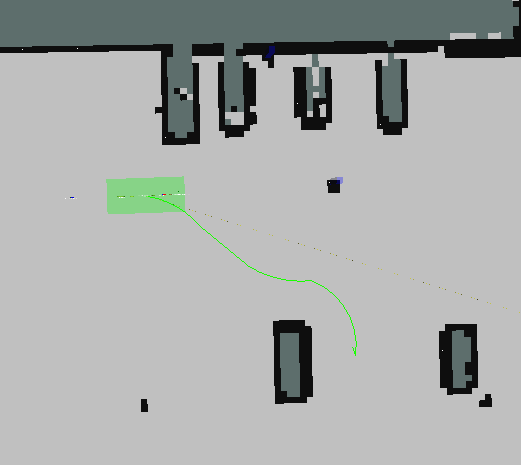

Autonomous Parking in an Unknown Dynamic Environment using CARLA

Mobile Robotics, January 2025 - April 2025

Abstract - This paper presents an open-source autonomous parking system designed for dynamic environments. It addresses a gap in existing open-source solutions, which often focus on holonomic path planning or simplified 2D scenarios. Unlike previous approaches, our system incorporates the non-holonomic constraints of the vehicle in a 3D scenario with moving pedestrians, providing a more realistic representation of real-world parking conditions. The system is implemented and tested in the CARLA simulation environment, including edge cases such as multiple static and patrolling pedestrians in the parking lot.

The code is publicly available for further development and research at https://github.com/mruck03/carla-startup. The project presentation is available on YouTube at https://www.youtube.com/watch?v=ddkKPQPK3gE.
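The non-holonomic constraint mentioned above means a car can only move along its heading and can only turn through a bounded radius. A common way to model this is the kinematic bicycle model; the sketch below is a generic version of it with a hypothetical wheelbase and time step, not necessarily the vehicle model used in this project's planner.

```python
import math


def bicycle_step(x, y, yaw, speed, steer, wheelbase=2.9, dt=0.1):
    """Advance a kinematic bicycle model one step (rear-axle reference point).

    Velocity always points along the heading (no sideways motion), and the
    yaw rate is set by the steering angle: the non-holonomic constraint.
    wheelbase (m) and dt (s) are hypothetical values.
    """
    x += speed * math.cos(yaw) * dt
    y += speed * math.sin(yaw) * dt
    yaw += speed / wheelbase * math.tan(steer) * dt
    return x, y, yaw


def min_turning_radius(max_steer, wheelbase=2.9):
    """Radius of the tightest circle the car can drive at full steering lock."""
    return wheelbase / math.tan(max_steer)


# Reversing with the wheels turned: the car swings backward along an arc.
state = (0.0, 0.0, 0.0)
for _ in range(20):
    state = bicycle_step(*state, speed=-1.0, steer=0.5)
```

Because the turning radius is bounded below, a planner built on this model cannot simply slide into a spot; it must chain forward and reverse arcs, which is what makes parking harder than holonomic path planning.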

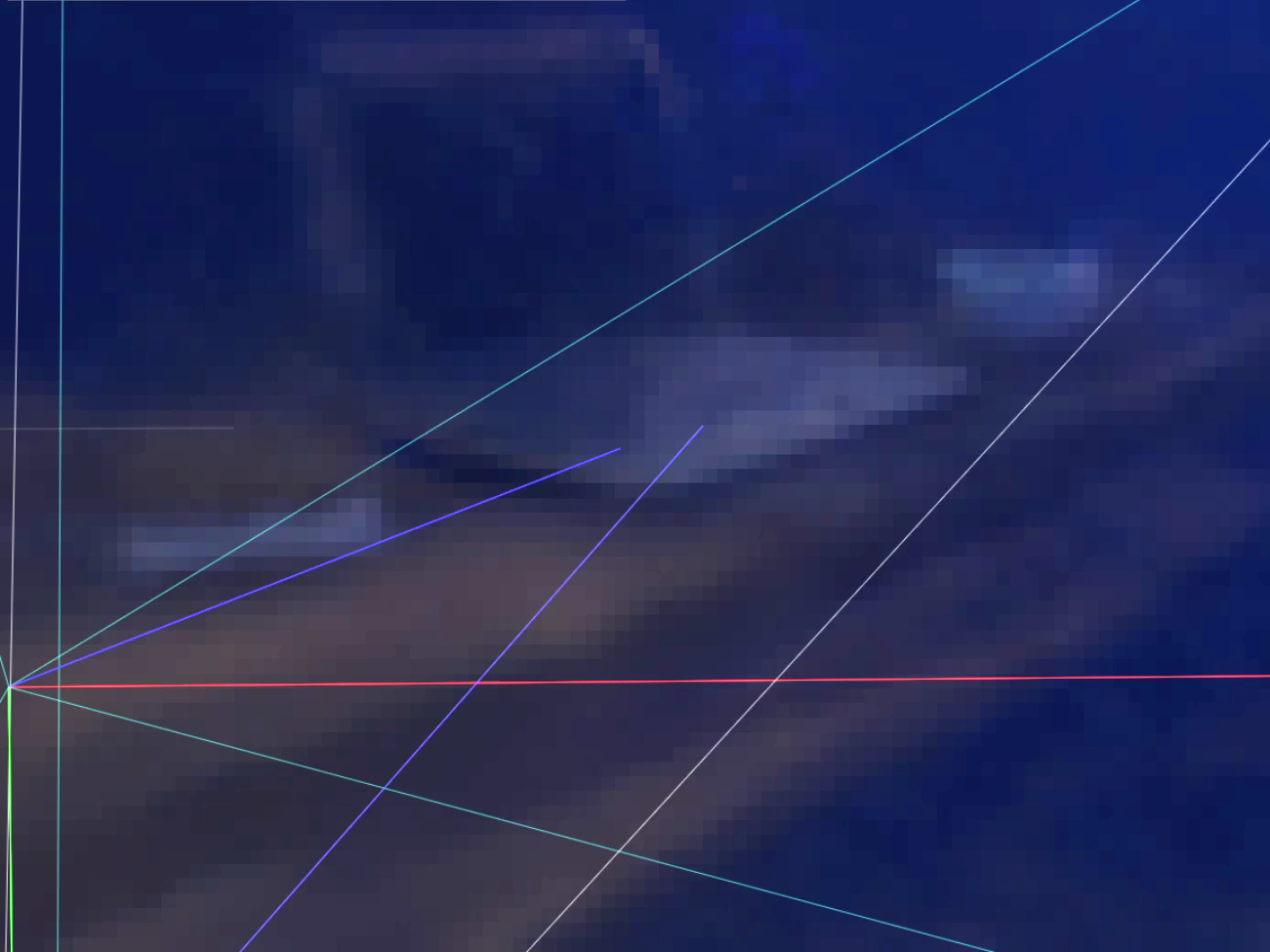

CelestiLoc - Visual Global Localization from Celestial Objects

3D Robot Perception, January 2025 - April 2025

Abstract - In this work, we present CelestiLoc, a vision-based framework for global localization that estimates the camera position from celestial objects. Our approach identifies stars through plate solving and uses a particle filter to estimate the camera's latitude and longitude. In addition, we detect the contrails of visible aircraft and compare their positions against ADS-B data projected onto the image, which serves as ground truth. Our work shows the potential of using celestial objects for localization and aircraft tracking in GPS-denied situations.

Code for this project is available at https://github.com/mruck03/CelestiLoc.
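The particle filter step can be sketched as a weight-and-resample update over (latitude, longitude) particles. This is a minimal, generic sketch: it assumes the star observations have already been turned into a noisy position fix (a hypothetical `measured` input with a hypothetical `sigma_km` noise level), whereas a full measurement model would compare predicted and detected star positions directly. Systematic resampling is one common choice, not necessarily the one CelestiLoc uses.

```python
import math
import random

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def update_and_resample(particles, measured, sigma_km, rng):
    """Weight (lat, lon) particles by a Gaussian likelihood, then resample.

    `measured` is a hypothetical (lat, lon) fix derived from the star field and
    `sigma_km` its assumed noise; both are illustration-only inputs.
    """
    weights = [math.exp(-0.5 * (haversine_km(*p, *measured) / sigma_km) ** 2)
               for p in particles]
    total = sum(weights)
    if total == 0.0:
        return list(particles)  # every weight underflowed: keep the prior set
    weights = [w / total for w in weights]

    # Systematic resampling: one random offset, then evenly spaced pointers
    # walk the cumulative weights, so high-weight particles get copied.
    n = len(particles)
    step = 1.0 / n
    pointer = rng.uniform(0.0, step)
    resampled, cumulative, i = [], weights[0], 0
    for _ in range(n):
        while pointer > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        pointer += step
    return resampled


# Start from a uniform prior over a band of the globe, then apply one update.
rng = random.Random(0)
particles = [(rng.uniform(-60.0, 60.0), rng.uniform(-180.0, 180.0))
             for _ in range(1000)]
particles = update_and_resample(particles, (42.28, -83.74), 500.0, rng)
```

In a running filter, each update would be preceded by a motion or diffusion step that perturbs the particles so the set does not collapse onto a few duplicates.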

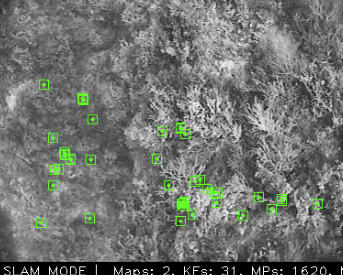

Underwater Visual Localization Benchmarking

Field Robotics Group, Dec 2023 - May 2024

As an undergraduate researcher in the Field Robotics Group, I independently studied state-of-the-art visual localization and mapping algorithms and how to apply them in underwater settings. One overlooked aspect of underwater autonomy is navigating extreme environments such as caves and sunken shipwrecks. Because autonomous underwater vehicles carry a limited set of sensors, leveraging advances in visual localization and mapping could give them new capabilities. This research serves as a foundation for new ideas on how to apply and enhance visual localization specifically for autonomous underwater vehicles.

HydroNeRF - NeRF SLAM for Underwater Scene Reconstruction

ROB 572 - Marine Robotics, Dec 2023 - May 2024

Related to my current research, I worked on a project for my marine robotics course that developed a Neural Radiance Field (NeRF) deep learning model to map underwater scenes from imagery collected by an autonomous underwater vehicle. The model builds on NeRF-SLAM and SeaThruNeRF: NeRF-SLAM uses visual SLAM to improve the rendering of NeRF scenes, and SeaThruNeRF accounts for attenuation and backscatter in underwater settings. The goal is to make it easy to create realistic underwater maps that help visualize scenes explored by autonomous underwater vehicles.
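For reference, the attenuation and backscatter that SeaThruNeRF accounts for follow the per-pixel underwater image formation model it builds on: the true color J fades with range z according to a direct-signal coefficient, while backscatter grows toward a veiling-light color B_inf according to its own coefficient. The sketch below evaluates that simplified per-pixel form and inverts it. SeaThruNeRF instead integrates the medium along each ray during volume rendering, and all coefficient values here are made up for illustration.

```python
import math


def underwater_color(J, z, beta_d, beta_b, B_inf):
    """Per-channel observed color: attenuated direct signal plus backscatter."""
    return [j * math.exp(-bd * z) + b * (1.0 - math.exp(-bb * z))
            for j, bd, bb, b in zip(J, beta_d, beta_b, B_inf)]


def restore_color(I, z, beta_d, beta_b, B_inf):
    """Invert the model: subtract backscatter, then undo attenuation."""
    return [(i - b * (1.0 - math.exp(-bb * z))) * math.exp(bd * z)
            for i, bd, bb, b in zip(I, beta_d, beta_b, B_inf)]


# Hypothetical RGB coefficients; red attenuates fastest in water.
beta_d = [0.40, 0.10, 0.08]
beta_b = [0.30, 0.20, 0.15]
B_inf = [0.05, 0.25, 0.35]
J = [0.8, 0.6, 0.4]
I = underwater_color(J, 3.0, beta_d, beta_b, B_inf)
```

At zero range the observed color equals J, and at large range it approaches B_inf, which is why distant underwater objects take on the blue-green color of the water.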

Past Projects

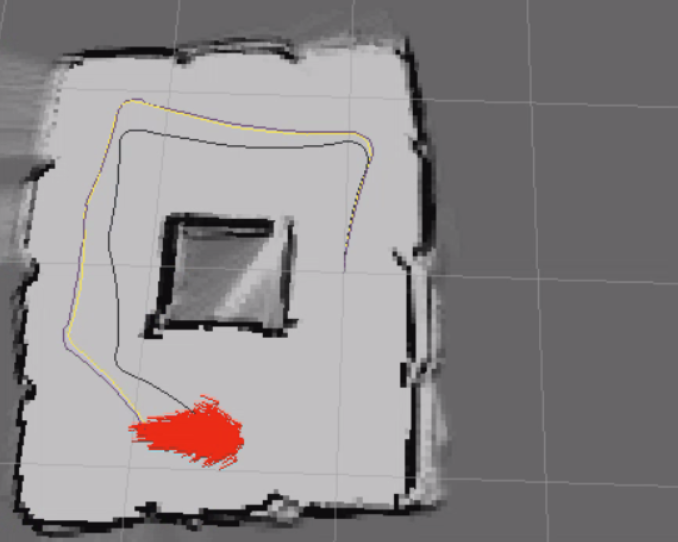

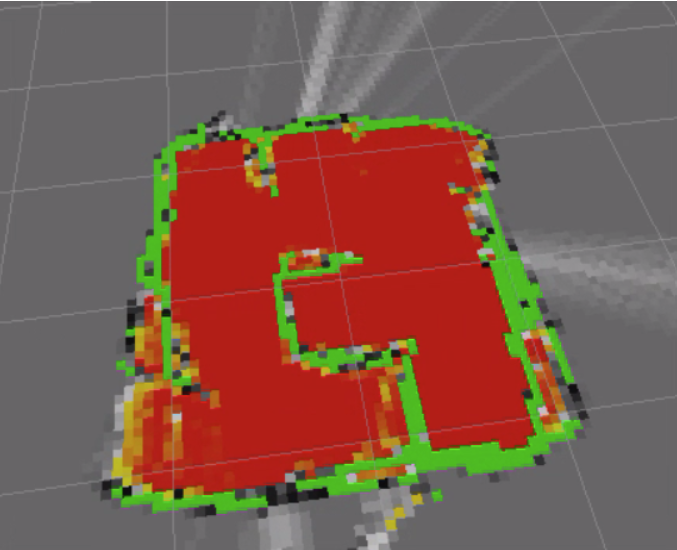

MBot Simultaneous Localization and Mapping

ROB 330 - Localization and Mapping, Oct 2023 - Dec 2023

For this project, I built an autonomous robot to test state-of-the-art SLAM algorithms in a closed maze using lidar and a camera feed. I developed and integrated multiple components, including motor controllers, particle filters, sensor and action models, odometry, occupancy grids, frontier detection, and A* path planning, into a cohesive program that explored unknown territory until no frontiers remained. The project required a strong understanding of the robot's subsystems and how to configure them to work together. We used ROS on a Jetson Nano to connect the Python and C++ code and pass data across the hardware. I worked in a group of three and learned how to code collaboratively with GitHub and other code management tools. In the end, the robot mapped a 10 × 10 ft maze and navigated it while keeping localization and mapping error within 5%.
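The stopping rule above (explore until no frontiers remain) rests on frontier detection: a frontier is a known-free cell next to unexplored space. The sketch below finds frontier cells in an occupancy grid and groups them into connected regions that an A* planner could target. The cell encoding (-1 unknown, 0 free, 1 occupied) and the 4- and 8-connectivity choices are assumptions for illustration, not the conventions of the MBot codebase.

```python
from collections import deque

UNKNOWN, FREE, OCCUPIED = -1, 0, 1  # hypothetical cell encoding


def frontier_cells(grid):
    """Return free cells that touch at least one unknown cell (4-connected)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = set()
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.add((r, c))
                    break
    return frontiers


def cluster_frontiers(cells):
    """Group frontier cells into 8-connected regions via breadth-first search."""
    remaining = set(cells)
    clusters = []
    while remaining:
        seed = remaining.pop()
        queue, region = deque([seed]), [seed]
        while queue:
            r, c = queue.popleft()
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    neighbor = (r + dr, c + dc)
                    if neighbor in remaining:
                        remaining.remove(neighbor)
                        queue.append(neighbor)
                        region.append(neighbor)
        clusters.append(sorted(region))
    return clusters


grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
regions = cluster_frontiers(frontier_cells(grid))
```

An exploration loop would pick one region (for example, the one with the nearest centroid), plan to it with A*, update the map from new scans, and repeat; once `frontier_cells` returns an empty set, the reachable space has been mapped.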

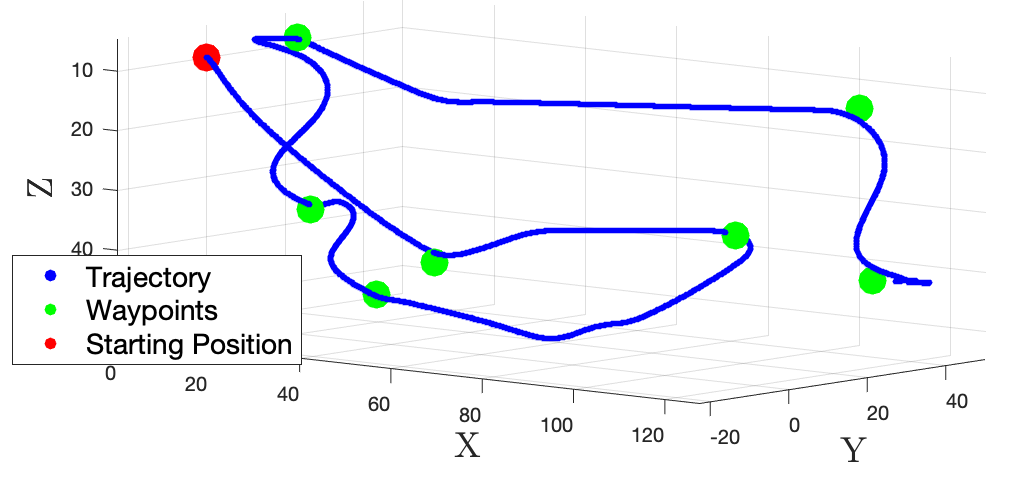

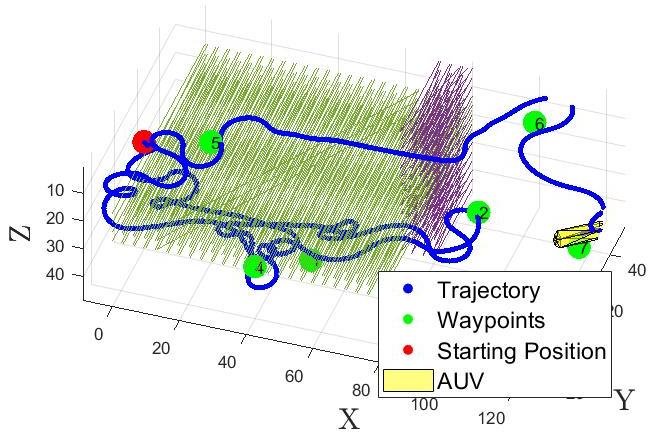

Autonomous Underwater Vehicle Simulation

ROB 498 - Autonomous Vehicles, Oct 2023 - Dec 2023

In this project, I worked with three graduate students to build a simulation model of the NPS AUV II from the Naval Postgraduate School. The project was driven by a desire to learn more about hydrodynamics and control models for autonomous underwater vehicles. We used MATLAB to create the simulation and to accurately model the AUV using the hydrodynamic coefficients published in the research paper on the NPS AUV II. We also implemented our own PID controller to drive the AUV to a sequence of given waypoints. Lastly, I extended the project with an obstacle-avoidance path-planning algorithm that maneuvers the AUV around objects in the simulated environment using the PID controller we implemented.
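A planar (horizontal-plane only) sketch of the waypoint-following logic: compute the bearing to the active waypoint, switch to the next waypoint inside an acceptance radius, and feed the wrapped heading error to a PID controller whose output would drive the rudder. The acceptance radius, gains, and function names are hypothetical, and the actual simulation was written in MATLAB with the full NPS AUV II dynamics; this Python version only illustrates the control logic.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to [-pi, pi) so the vehicle turns the short way."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class PID:
    """Textbook discrete PID controller (no saturation or anti-windup)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def waypoint_heading_error(x, y, heading, waypoints, index, accept_radius=2.0):
    """Skip past reached waypoints, then return (heading error, active index)."""
    while index < len(waypoints) - 1:
        wx, wy = waypoints[index]
        if math.hypot(wx - x, wy - y) > accept_radius:
            break
        index += 1
    wx, wy = waypoints[index]
    desired = math.atan2(wy - y, wx - x)
    return wrap_angle(desired - heading), index


pid = PID(kp=1.5, ki=0.05, kd=0.4)  # hypothetical gains
error, active = waypoint_heading_error(0.0, 0.0, 0.0, [(1.0, 0.0), (0.0, 10.0)], 0)
rudder = pid.update(error, dt=0.1)
```

Wrapping the error matters: without it, a vehicle heading at 170° toward a target bearing of -170° would command a 340° turn instead of a 20° one.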

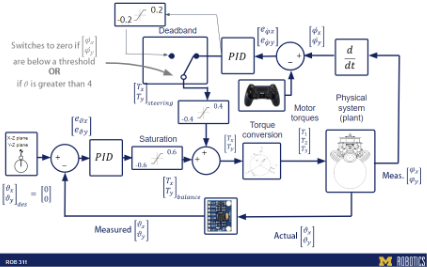

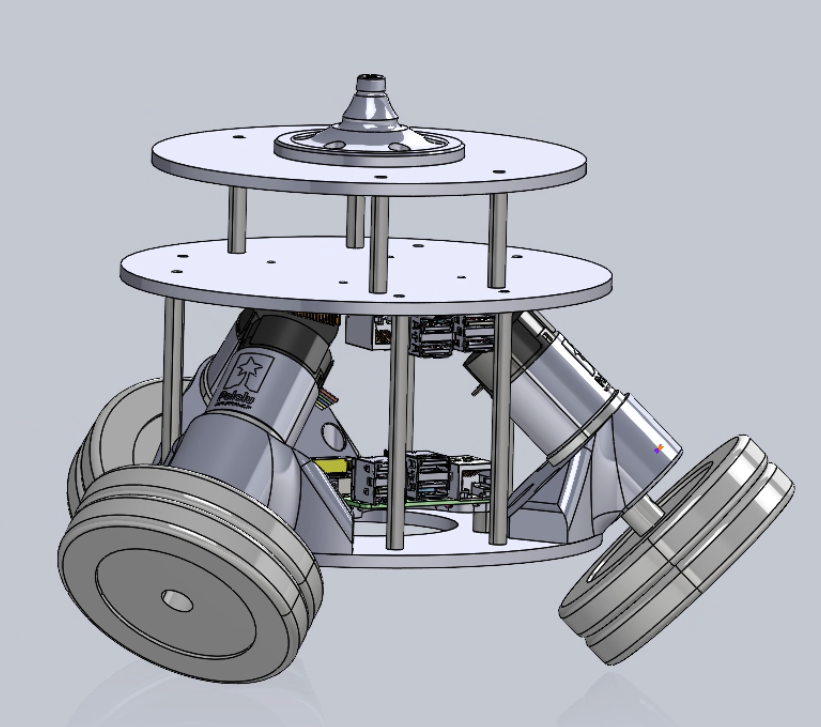

Self-Balancing Mobile Ball Robot

ROB 311 - How to Build and Make Robots, Oct 2023 - Dec 2023

In this project, I worked with another robotics major to build a self-balancing mobile ball robot (Ballbot) from scratch. This required analyzing the theoretical dynamics, selecting sensors and motors, manufacturing parts, and programming the controller, so the project called for a breadth of knowledge across both the hardware and software sides of robotics. We modeled each component in SolidWorks, then manufactured and assembled the Ballbot using 3D printing and laser cutting. Next, we implemented control algorithms so the Ballbot could balance and perform a series of tasks. These included multiple PID controllers for balancing and velocity control, along with saturation limits and other tuned parameters for steady-state control. We also implemented data communication between a Raspberry Pi, which handled information processing, and a Pico board, which handled motor control and sensor readings. In the end, our Ballbot could balance on its own for over 10 minutes and could be driven with a Bluetooth controller.
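One way to combine several PID loops with saturation, as described above, is a cascade: an outer velocity loop outputs a desired lean angle (clamped to a safe tilt), and an inner balance loop turns the tilt error into a motor command (clamped to the actuator limit). The sketch below shows that structure for a single axis with conditional-integration anti-windup. The gains, limits, sign conventions, and cascade layout are assumptions for illustration, not the tuned controller from this project.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


class SaturatedPID:
    """PID with a clamped output and conditional-integration anti-windup."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        if self.prev_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        raw = self.kp * error + self.ki * candidate + self.kd * derivative
        out = clamp(raw, -self.out_limit, self.out_limit)
        # Anti-windup: only accept the new integral when the output is not
        # saturated, so the integrator cannot keep growing against the limit.
        if out == raw:
            self.integral = candidate
        return out


def ballbot_axis_step(velocity_loop, balance_loop, v_ref, v_meas, tilt_meas, dt):
    """One step of a hypothetical cascaded controller for a single axis."""
    tilt_ref = velocity_loop.update(v_ref - v_meas, dt)  # clamped lean angle (rad)
    return balance_loop.update(tilt_ref - tilt_meas, dt)  # clamped motor command


velocity_loop = SaturatedPID(kp=0.5, ki=0.1, kd=0.0, out_limit=0.1)  # hypothetical
balance_loop = SaturatedPID(kp=10.0, ki=0.0, kd=1.0, out_limit=2.0)  # hypothetical
command = ballbot_axis_step(velocity_loop, balance_loop, 1.0, 0.0, 0.0, 0.01)
```

A ballbot balances in two horizontal directions, so a full controller would run one such cascade per axis.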